Streamlining Autodesk Deployments: How CQi Simplifies the Installation Process

For many organisations, Autodesk software is mission-critical — used daily across design, engineering, and construction teams.

Essential though it is, most architects and engineers don't have the time to understand what lies beneath the IT systems and equipment they use every day. The same can be true of the people who have been given the responsibility of looking after it.

With technology changing continuously, it is difficult to keep up with the latest updates and ever-expanding jargon. This post continues from our previous blog post, which attempted to "lift the mist" on what lies beneath the systems and equipment used regularly by the industry and their importance in the world of design. There we discussed personal computers (PCs), workstations and central processing units (CPUs). This time we'll discuss memory, storage and graphics.

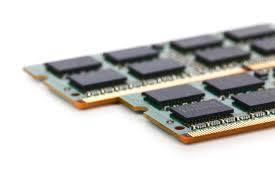

Memory

Random Access Memory (RAM), often referred to simply as memory, is probably the most critical component next to the CPU. It is a temporary form of memory that holds data and code whilst the machine is in use. RAM allows data to be read or written in almost the same amount of time irrespective of its physical location inside the memory. In contrast, with other direct-access storage media such as CDs and hard drives, access time varies with the physical location of the data.

RAM is used because it is extremely fast to read from and write to. As stated in our previous blog post, the only memory quicker than RAM in a system is the cache on the CPU chip. Like cache, RAM is volatile, meaning its data is lost if the power is lost.

A CPU can only work as quickly as it can access data. For each tick of the computer's clock (the internal mechanism that makes everything move along) that the CPU does not have data, that time is wasted. Fast memory serves data to the CPU as quickly as possible, so less time is wasted and performance improves.
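To put a rough number on that wasted time, the sketch below converts a memory access delay into CPU clock ticks. The 3GHz clock and the two latency figures are illustrative assumptions, not measurements from any particular system, and `stall_cycles` is a hypothetical helper name.

```python
def stall_cycles(memory_latency_ns: float, cpu_clock_ghz: float) -> float:
    """Clock ticks that pass while the CPU waits for one memory access."""
    # A clock running at N GHz ticks N times every nanosecond.
    return memory_latency_ns * cpu_clock_ghz


# Illustrative (assumed) figures: a 3GHz CPU with slower and faster memory.
for label, latency_ns in [("slower memory", 100.0), ("faster memory", 60.0)]:
    ticks = stall_cycles(latency_ns, cpu_clock_ghz=3.0)
    print(f"{label}: about {ticks:.0f} clock ticks spent waiting per access")
```

In practice the CPU cache hides many of these waits, so treat the result as an upper bound for a single uncached access rather than a prediction of real performance.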

RAM has changed over the years with Double Data Rate (DDR) being the standard since 2000. Since then we've had 4 generations with the latest being DDR4. Like the CPU, RAM has a number of variables to it and matching these with the CPU and workloads is important. The table below outlines this:

| Specification | Example | What it means |
| --- | --- | --- |
| Type | UDIMM | UDIMM and RDIMM refer to unregistered and registered Dual In-line Memory Modules (DIMMs). The difference is that the latter have a register between the RAM modules and the system's memory controller. This places less electrical load on the memory controller and allows systems to remain stable with more RAM modules than they would otherwise support. |
| Capacity | 4GB | RAM capacity is now normally measured in gigabytes (GB), whereas hard disk drives are now normally measured in terabytes (TB), where 1TB is 1,000GB. |
| Data Rate | 2666MHz or | The data rate indicates how much bandwidth the memory has, or how much data can be transferred in a given time. It can be quoted in MHz, MT/s (mega-transfers per second) or as a PC-xxxxx designation. These always correspond once converted, e.g. DDR4 memory marked as 2666MHz or 2666MT/s is always PC4-21300. The PC number is the standard module name and relates to peak bandwidth in MB/s (megabytes per second). Many articles have been written about this but, simply put, the larger the number, the quicker the data rate. However, a higher data rate can also mean a higher latency (see below). |
| Latency (CAS) | CL15-15-15 | Column Address Strobe (CAS) latency is the delay between data being requested and it being available to read. The interval is normally specified in clock cycles, so the actual time depends on the data rate, but it can also be expressed as a time in nanoseconds. |
| Channel | Single | Multi-channel memory architecture increases the data transfer rate between the memory and the CPU by adding more channels of communication between them. Theoretically this multiplies the data rate by the number of channels present, e.g. if your CPU supports dual-channel memory, two modules running at PC4-21300 could provide up to roughly 42GB of data per second. |
| ECC | ECC & Non-ECC | ECC stands for Error Correction Code and allows the memory to detect and correct some RAM errors without user intervention. ECC replaced parity memory, which could only detect errors, not correct them. |
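The relationships between data rate, channels and latency can be checked with a little arithmetic. The sketch below is a minimal illustration, assuming a standard 64-bit (8-byte) data bus per memory channel and double data rate signalling (two transfers per clock cycle). The function names are hypothetical, and the results are theoretical peaks rather than real-world throughput.

```python
def peak_bandwidth_mb_s(data_rate_mt_s: int, channels: int = 1,
                        bus_width_bits: int = 64) -> int:
    """Theoretical peak bandwidth in MB/s for a given data rate."""
    bytes_per_transfer = bus_width_bits // 8  # assumed 64-bit bus = 8 bytes
    return data_rate_mt_s * bytes_per_transfer * channels


def cas_latency_ns(cas_cycles: int, data_rate_mt_s: int) -> float:
    """Convert a CAS latency in clock cycles to nanoseconds."""
    # DDR moves data twice per clock, so the clock runs at half the data rate.
    clock_mhz = data_rate_mt_s / 2
    return cas_cycles * 1000 / clock_mhz


single = peak_bandwidth_mb_s(2666)            # one channel of DDR4-2666
dual = peak_bandwidth_mb_s(2666, channels=2)  # two channels
print(f"Single channel: {single} MB/s, dual channel: {dual} MB/s")
print(f"CL15 at 2666MT/s: {cas_latency_ns(15, 2666):.2f} ns")
```

This also shows why a higher CAS number is not automatically slower: the same cycle count takes less time at a higher data rate, so latency is best compared in nanoseconds.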

Storage

Beyond dealing with instructions, a PC also needs to store data permanently, so that it survives when the machine is turned off. This could be user data, but also system data like the operating system and applications. The most common type of non-volatile memory, commonly known as storage, is the Hard Disk Drive (HDD), a mechanical storage device with moving parts. Although HDDs are one of the slowest types of storage, they are cheap and can provide substantial amounts of space.

The other common type of storage is the Solid State Drive (SSD), or flash, which is also non-volatile but has no mechanical moving parts. SSDs are much faster than HDDs but are more expensive. SSDs come in different flavours offering improved performance, but as performance increases, so does the cost. As with all components, each has its uses, and both workloads and budgets have to be considered.

All HDDs and SSDs have 4 key elements (apart from cost) to determine where each could be useful:

There are other things to note about HDDs and SSDs. For instance it is believed that once SSDs become as cheap as HDDs, they will replace all HDDs. However this is unlikely to make options simpler; with one technology going, others will come in.

Using the same flash technology as SSDs that connect like a standard HDD, the M.2 SSD is becoming more prevalent in high-performance devices like workstations as well as in smaller devices like notebooks. M.2 SSDs remove the bottleneck caused by the original HDD connection, SATA, by connecting via the native PCI Express lanes presented by the CPU. This means the CPU can reach flash memory's high speeds through the path of least resistance, with minimal conversions and intermediate chips.
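To give a feel for the size of that SATA bottleneck, the sketch below compares the theoretical interface limits. It assumes SATA III (6Gbit/s with 8b/10b encoding) and a PCI Express 3.0 link with four lanes (8GT/s per lane with 128b/130b encoding). The post does not say which PCIe generation or lane count a given M.2 drive uses, so those values are assumptions, and real drives will deliver less than these ceilings.

```python
def usable_mb_s(line_rate_gbit_s: float, payload_bits: int,
                encoded_bits: int, lanes: int = 1) -> float:
    """Theoretical usable throughput in MB/s after line-encoding overhead."""
    payload_gbit_s = line_rate_gbit_s * payload_bits / encoded_bits * lanes
    return payload_gbit_s * 1000 / 8  # Gbit/s -> MB/s


sata3 = usable_mb_s(6.0, payload_bits=8, encoded_bits=10)
pcie3_x4 = usable_mb_s(8.0, payload_bits=128, encoded_bits=130, lanes=4)  # assumed link

print(f"SATA III ceiling:    about {sata3:.0f} MB/s")
print(f"PCIe 3.0 x4 ceiling: about {pcie3_x4:.0f} MB/s")
print(f"Ratio: roughly {pcie3_x4 / sata3:.1f}x")
```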

Another thing to be aware of is that although RAM and disks are both quoted in GB, there are two different definitions of a gigabyte. You may have noticed that a 500GB disk shows only about 465GB once it is added to your machine. Where has the space gone? Although some is used by the system for configuration and settings, the main reason is that disk manufacturers use decimal gigabytes, where each unit step is 1,000 (1GB is 1,000MB), whilst RAM and many operating systems use gibibytes (GiB), where each step is 1,024 (1GiB is 1,024MiB, or mebibytes).
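The 500GB example can be reproduced with a short calculation. This is a minimal sketch; `decimal_gb_to_gib` is a hypothetical helper name, and it ignores the small amount of space taken by formatting and system files.

```python
def decimal_gb_to_gib(gb: float) -> float:
    """Convert decimal gigabytes (1000**3 bytes) to gibibytes (1024**3 bytes)."""
    total_bytes = gb * 1000**3
    return total_bytes / 1024**3


print(f"A 500GB disk is {decimal_gb_to_gib(500):.1f} GiB")  # 465.7 GiB
```

The gap grows with capacity: the two units differ by about 7% at the gigabyte level, which is why larger disks appear to "lose" more space.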

If you wish to read more on this topic, see the following links: https://en.wikipedia.org/wiki/Gibibyte and https://youtu.be/p3q5zWCw8J4

Graphics

When it comes to the construction sector, graphics can be as important as any other component because of users' 3D workloads. All graphics are provided by the Graphics Processing Unit (GPU), a computer chip that performs rapid mathematical calculations, primarily for the purpose of rendering images.

In the early days of computing, the CPU performed these calculations but as more graphics-intensive applications such as AutoCAD were developed, their demands put strain on the CPU and degraded performance. GPUs came about as a way to offload those tasks from CPUs, freeing up their processing power.

Nowadays a GPU can be built into the CPU, but for heavier 3D workloads, like AutoCAD and Revit, a separate GPU on a graphics card is required. Graphics cards come in varying forms and, like all other components, have a number of variables that need to be matched to the workloads. As with CPUs, RAM and storage, graphics cards come in workstation grades that provide additional benefits over consumer units.

So let's understand the basics of the graphics card's architecture first. A graphics card is like a mini computer in its own right, with a dedicated processor and memory and, in some very rare instances, even dedicated flash storage. Again, like other parts of a PC, the GPU is not as simple as a single speed figure. Each manufacturer and card type brings its own take. Specifications (and their numbers) could include shader units, texture units, render output units (ROPs), memory data rate, memory bus, memory capacity and clock rate for the graphics processor.

So, what is the best way to determine which GPU is right? The key factor is the workload, as some cards are better at certain jobs. An example would be Nvidia's Quadro range of cards, which are built for professional industries and workloads such as design, advanced special effects and complex scientific visualisations. The best way to choose the right GPU is first to decide on the principal workloads, which primarily means deciding on the applications. From there, the easiest way to see which GPUs are suitable is to look at benchmarks.

In a benchmark, the most expensive cards will normally come out on top, so other factors need to be taken into account, such as how much performance is actually needed. In life, the most expensive item is normally the fastest, but is it needed? A Ferrari is one of the fastest cars, but most people would find no use for one.

Another very important aspect is support. Many Independent Software Vendors (ISVs) will only support a select number of GPUs, and these are normally the professional cards. The reason is that the hardware vendors work closely with the ISVs to create specific GPUs that are thoroughly tested and made to fit the ISV's requirements. If you select an unsupported card and encounter an issue with the software, the ISV may not help until you change your setup.
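The selection logic described above (define the workload, check the benchmarks, avoid overspending and confirm ISV support) can be sketched as a simple filter. Everything in this example is hypothetical: the card names, scores and prices are made-up placeholders, not real products or benchmark results, and `pick_card` is an illustrative helper rather than an established tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Card:
    name: str
    benchmark_score: float  # score in a benchmark for your chosen application
    price: float
    isv_certified: bool     # whether the software vendor supports this card


def pick_card(cards: list[Card], required_score: float) -> Optional[Card]:
    """Return the cheapest supported card that meets the performance target."""
    candidates = [
        c for c in cards
        if c.isv_certified and c.benchmark_score >= required_score
    ]
    # The fastest card is not automatically the right one: choose on price
    # once the performance and support requirements are both satisfied.
    return min(candidates, key=lambda c: c.price, default=None)


# Placeholder data for illustration only.
cards = [
    Card("Card A", benchmark_score=100, price=300, isv_certified=False),
    Card("Card B", benchmark_score=120, price=600, isv_certified=True),
    Card("Card C", benchmark_score=200, price=1500, isv_certified=True),
]

choice = pick_card(cards, required_score=110)
print(choice.name if choice else "No supported card meets the target")
```

With these placeholder figures the sketch picks Card B: Card A is cheaper but unsupported, and Card C is faster but costs more than the workload requires.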

How to Choose the Correct Systems and Equipment for your Business

Whilst this post and our previous blog post may have helped you understand the IT jargon used in the construction industry, how do you choose which systems and equipment are best for your business?

Benchmarks created by manufacturers or software vendors can be skewed, as they may have interests elsewhere. At Symetri, we can run benchmarks for you and provide you with our workstation matrix, which looks at all the latest Autodesk products and recommends the best-of-breed hardware for these workloads.

We also help customers with custom-built systems, as specific niche requirements will call for unusual hardware. We provide hardware not only for large teams of Revit, AutoCAD or Inventor users but also for visualisers working on heavy renders, 3ds Max work and large or mobile VR setups.

To find out more about any of the terms mentioned in this blog post, or for help with choosing the right systems and components for your business, please contact us.
